The Great AI Power Crunch

Chart of the Week #77

Last week, I wrote about the AI bubble (catch up here and here), arguing that the technology is real but the valuations are absurd, and that the smart bet isn't on picking which AI company wins, but on the one thing every single one of them desperately needs: energy.

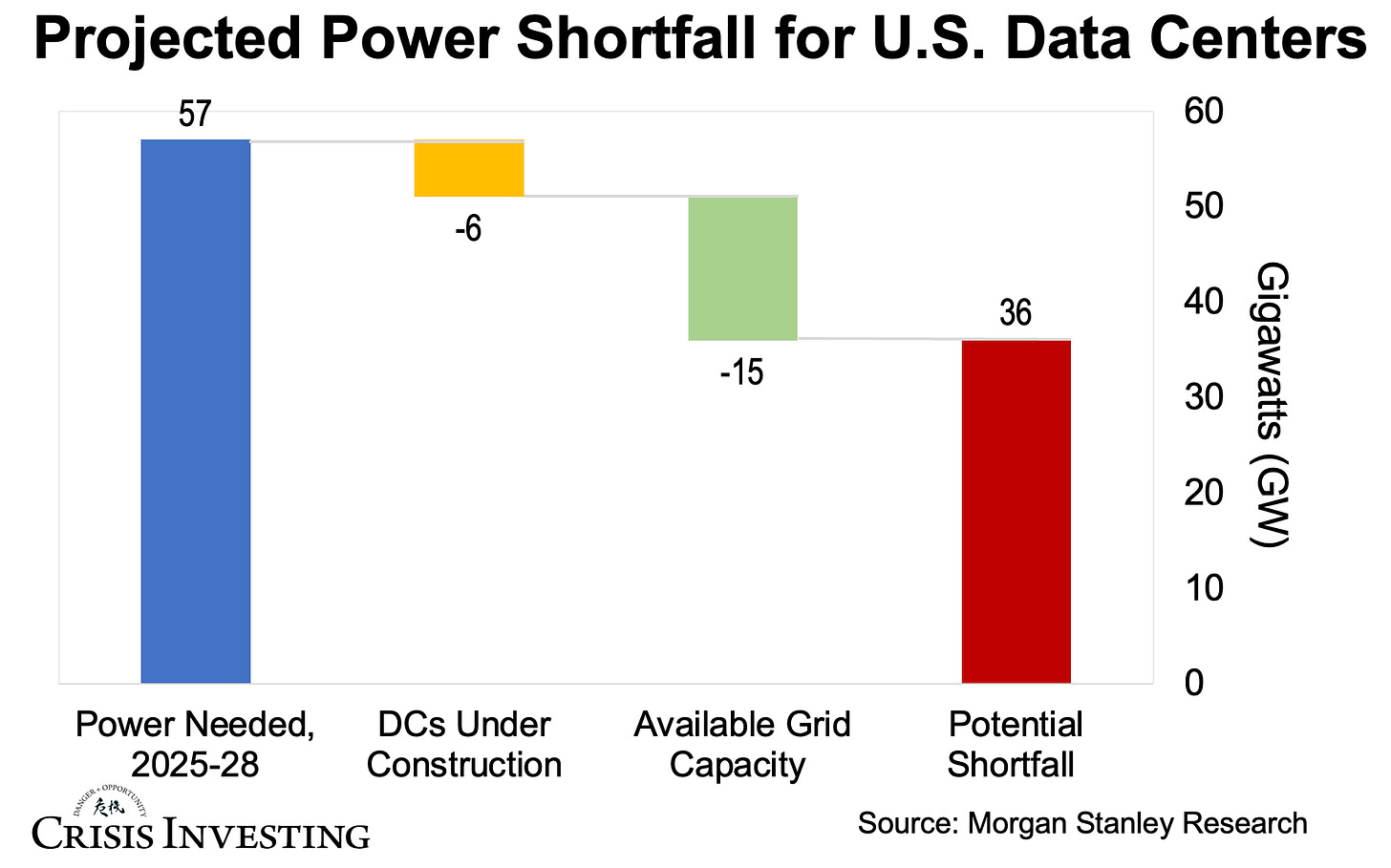

Today’s chart drives that point home. Take a look below.

What you’re seeing is a massive supply–demand imbalance—the kind that has opportunity written all over it for energy investors.

Between 2025 and 2028, U.S. data centers are projected to demand roughly 57 gigawatts (GW) of power. That’s an enormous amount of electricity. To put it in perspective, that’s enough to power about 45 million U.S. homes—roughly a third of all households in the country.

Now look at what’s available. Data centers currently under construction will add about 6 GW. The grid has roughly 15 GW of capacity that can be tapped without waiting years for upgrades. Put those together and you get 21 GW.

But even after squeezing every watt from new builds and the existing grid, we’re staring down a 36-GW shortfall (57 GW of demand minus 21 GW available). That’s the big red bar in the chart above.
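The shortfall is simple subtraction, but writing it out makes each input explicit. Below is a minimal Python sketch that uses only the figures quoted above. The variable names are my own, and the per-home line does not use any official household statistic. It just backs out the average continuous load that the "45 million homes" comparison implies.

```python
# Figures from the chart above (Morgan Stanley's original 2025-2028 projection).
DEMAND_GW = 57              # projected U.S. data center demand
UNDER_CONSTRUCTION_GW = 6   # supply from data centers already being built
GRID_HEADROOM_GW = 15       # grid capacity usable without multi-year upgrades
HOMES_EQUIVALENT = 45e6     # homes the article says 57 GW could power


def shortfall_gw(demand: float, *supplies: float) -> float:
    """Demand left unmet after every listed supply source is used in full."""
    return demand - sum(supplies)


available_gw = UNDER_CONSTRUCTION_GW + GRID_HEADROOM_GW
gap_gw = shortfall_gw(DEMAND_GW, UNDER_CONSTRUCTION_GW, GRID_HEADROOM_GW)

# Average continuous load per home implied by "57 GW ~ 45 million homes".
kw_per_home = DEMAND_GW * 1e6 / HOMES_EQUIVALENT   # 1 GW = 1e6 kW
kwh_per_home_year = kw_per_home * 8760             # hours in a non-leap year

print(f"Available: {available_gw} GW, shortfall: {gap_gw} GW")
print(f"Implied load per home: {kw_per_home:.2f} kW (~{kwh_per_home_year:,.0f} kWh/yr)")
```

The comparison works out to about 1.27 kW of round-the-clock load per home, or roughly 11,100 kWh a year.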

So what’s driving this?

Like I told you last week, AI is an energy hog. A single ChatGPT query consumes 3 to 30 times as much energy as a simple Google search. As a loose analogy, it's as if every question you ask burns a teaspoon of oil.

That's because AI systems don't just pull a result from a database. They run on specialized, power-hungry hardware inside massive data centers, chewing through enormous amounts of data in real time. A single AI server rack devours about 80 kilowatts, roughly 16 times what a traditional server rack draws. Multiply that by thousands of racks across hundreds of facilities, and you start to see why we're headed into a massive crunch.
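To see how quickly "multiply that by thousands of racks" adds up, here is a rough Python sketch. The 80 kW figure and the 16x ratio come from the paragraph above. The fleet size (1,000 racks in each of 100 facilities) is purely hypothetical and was chosen only to illustrate the scaling. The function counts rated rack power only and ignores cooling and other facility overhead, so actual grid draw would be higher.

```python
AI_RACK_KW = 80.0            # per-rack draw quoted in the article
RATIO_VS_TRADITIONAL = 16    # "roughly 16x" a traditional rack

# Implied draw of a traditional rack.
traditional_rack_kw = AI_RACK_KW / RATIO_VS_TRADITIONAL


def fleet_power_gw(rack_kw: float, racks_per_facility: int, facilities: int) -> float:
    """Total IT load in GW if every rack runs at its rated power.

    Ignores cooling and other facility overhead, so real grid draw would be higher.
    """
    return rack_kw * racks_per_facility * facilities / 1e6   # 1 GW = 1e6 kW


# Hypothetical fleet size, chosen only to illustrate the scaling.
RACKS_PER_FACILITY = 1_000
FACILITIES = 100

ai_fleet_gw = fleet_power_gw(AI_RACK_KW, RACKS_PER_FACILITY, FACILITIES)
legacy_fleet_gw = fleet_power_gw(traditional_rack_kw, RACKS_PER_FACILITY, FACILITIES)

print(f"AI fleet: {ai_fleet_gw:.1f} GW vs traditional fleet: {legacy_fleet_gw:.1f} GW")
```

With those made-up numbers, 100,000 AI racks draw about 8 GW. The same number of traditional racks (about 5 kW each) would draw about 0.5 GW.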

And that crunch isn’t easing—it’s only getting bigger.

Which brings me to this.

Morgan Stanley—one of the big Wall Street shops—put out these projections about a year ago. I grabbed the chart at the time and then let it sit on my desktop for months. And today, when I finally went to use it, I double-checked the numbers—turns out they’ve already updated them.

Morgan Stanley now expects U.S. data centers to consume 65 GW, not the 57 GW shown in the chart above. That pushes the shortfall to about 45 GW, roughly 25% larger than the original 36-GW gap.
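The revision is worth a quick check, because the gap grows faster than demand. Supply is roughly fixed, so every extra gigawatt of demand goes straight into the shortfall. The short Python sketch below uses only the four figures quoted in the article, and the names are illustrative. If you subtract each shortfall from its demand figure, you get 21 GW of supply under the original projection and 20 GW under the update. The article doesn't say whether that difference reflects a revised supply estimate or just rounding.

```python
# Morgan Stanley's original and updated figures as quoted in the article.
ORIGINAL = {"demand_gw": 57, "shortfall_gw": 36}
UPDATED = {"demand_gw": 65, "shortfall_gw": 45}


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


shortfall_growth_pct = pct_change(ORIGINAL["shortfall_gw"], UPDATED["shortfall_gw"])
demand_growth_pct = pct_change(ORIGINAL["demand_gw"], UPDATED["demand_gw"])

# Supply implied by each projection: demand minus shortfall.
implied_supply = {
    name: p["demand_gw"] - p["shortfall_gw"]
    for name, p in (("original", ORIGINAL), ("updated", UPDATED))
}

print(f"Shortfall up {shortfall_growth_pct:.0f}%, demand up {demand_growth_pct:.0f}%")
print(f"Implied available supply: {implied_supply}")
```

Demand rises about 14%, while the shortfall rises 25%.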

That's a huge supply gap. Those 45 GW won't magically appear. They have to be generated, built, or taken from someone else.

This is why I keep saying the real AI play isn’t trying to guess who builds the best chatbot. It’s owning the energy infrastructure they all rely on. ChatGPT, Gemini, Copilot, Grok—they all run on electricity. And it looks like they’ll need absolutely massive amounts of it.

Enjoy the rest of your weekend,

Lau Vegys

P.S. The coming energy buildout is exactly why a portion of our Crisis Investing portfolio is focused on energy companies positioned to benefit from America’s push to double electricity generation. These picks—many of which Doug himself owns—stand to profit regardless of which AI companies survive the shakeout.
